Project Loom? Better Futures? What's next for concurrent programming on the JVM

Adam Warski (@adamwarski), SoftwareMill

Scale By the Bay, November 12th, 2020

The problem

We want to concurrently run multiple processes.

Concurrency examples

- http requests

- processing messages from a queue

- integrating external services

- orchestrating workflows

- background jobs

- ...

Concurrency

We'd like to create a large number of processes in a cheap way.

- it's necessary

- it's easier

On the JVM / in Java

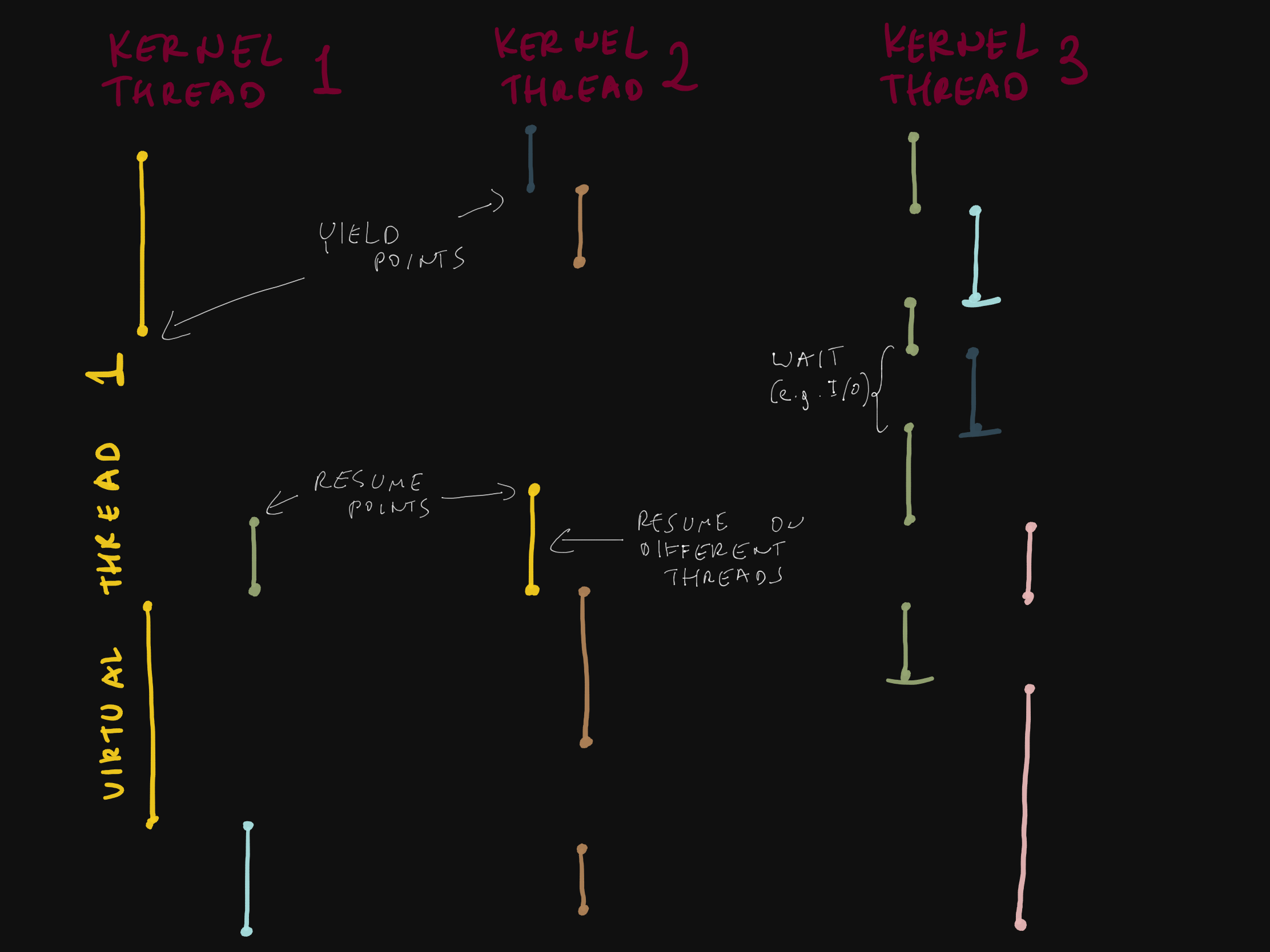

Currently, the basic unit of concurrency is a Thread.

Threads map 1-1 to kernel threads:

- expensive to create (time & memory)

- expensive to switch (time)

- limited in number (stack memory)

Who am I?

- Co-founder of SoftwareMill

- 15 years developing backend applications

- J2EE, Spring, manual concurrency, Akka, FP

Our expertise

- distributed systems, messaging, blockchain, ML/AI, big/fast data, ...

- Scala, Kafka, Cassandra consulting

Open-source contributor

sttp client, sttp tapir, quicklens, Hibernate Envers, ...

Current solutions

Thread pooling

- created upfront

- expensive to switch

- limited in number (stack memory)

- we have to deal with threads
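A minimal sketch of the pooled approach, assuming a fixed pool from `java.util.concurrent`. The class name, pool size and the trivial task are illustrative, not from the talk:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

final class ThreadPoolSketch {
    // Submits `tasks` small jobs to a pool of `poolSize` threads created upfront
    // and returns the sum of their results.
    static int sumOnPool(int poolSize, int tasks) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        try {
            List<Future<Integer>> futures = new ArrayList<>();
            for (int i = 1; i <= tasks; i++) {
                final int n = i;
                futures.add(pool.submit(() -> n * 2));
            }
            int sum = 0;
            for (Future<Integer> f : futures) {
                sum += f.get(); // blocks the calling (kernel) thread until the task is done
            }
            return sum;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown(); // the pool's lifecycle is still managed by hand
        }
    }

    public static void main(String[] args) {
        System.out.println(sumOnPool(4, 10));
    }
}
```

The number of tasks running at once is capped by the pool size, which is the "limited in number" point above.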

Current solutions

CompletableFuture<T>

A value, representing a computation running in the background.

Eventually, the computation will yield a value of type T or end with an error.

- cheap to create

- cheap to switch

- unlimited in number (heap memory)
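Both outcomes (a value of type T, or an error) can be observed with `handle`. A small sketch; `parse` and `describe` are hypothetical helpers used only for illustration:

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CompletionException;

final class FutureOutcomeSketch {
    // Starts a background computation that either yields an Integer or fails.
    static CompletableFuture<Integer> parse(String input) {
        return CompletableFuture.supplyAsync(() -> Integer.parseInt(input));
    }

    // Waits for the future and turns either outcome into a description.
    static String describe(CompletableFuture<Integer> future) {
        return future.handle((value, error) -> {
            if (error == null) {
                return "value: " + value;
            }
            // Failures may arrive wrapped in a CompletionException; unwrap if so.
            Throwable cause = (error instanceof CompletionException && error.getCause() != null)
                ? error.getCause()
                : error;
            return "error: " + cause.getClass().getSimpleName();
        }).join();
    }

    public static void main(String[] args) {
        System.out.println(describe(parse("42")));
        System.out.println(describe(parse("not a number")));
    }
}
```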

Composing futures

var f1 = CompletableFuture.supplyAsync(() -> "x");

var f2 = CompletableFuture.supplyAsync(() -> 42);

var f3 = f1.thenCompose(v1 ->

f2.thenApply(v2 -> "Result: " + v1 + " " + v2));

var f = CompletableFuture

.supplyAsync(() -> 42)

.thenCompose(v1 ->

CompletableFuture.supplyAsync(() -> "Got: " + v1));

Life used to be simple ...

boolean activateUser(Long userId) {

User user = database.findUser(userId);

if (user != null && !user.isActive()) {

database.activateUser(userId);

return true;

} else {

return false;

}

}

... not anymore

CompletableFuture<Boolean> activateUser(Long userId) {

return database.findUser(userId).thenCompose((u) -> {

if (u != null && !u.isActive()) {

return database.activateUser(userId)

.thenApply((r) -> true);

} else {

return CompletableFuture.completedFuture(false);

}

});

}

Problems with Future

- lost control flow

- lost context

- viral

Enter Project Loom

Project Loom aims to drastically reduce the effort of writing, maintaining, and observing high-throughput concurrent applications that make the best use of available hardware.

What is Loom?

Virtual threads

- just like threads, but cheap to create and block

- in other languages: fibers, goroutines, coroutines, processes, green threads ...

Thread t = Thread.startVirtualThread(() -> { ... });
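To give a feel for "cheap to create and block", here is a sketch that starts many virtual threads, each of which blocks briefly. It assumes a JDK where `Thread.startVirtualThread` is available without preview flags (JDK 21 or later); the class name and the counts are illustrative:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

final class VirtualThreadsSketch {
    // Starts `count` virtual threads that each sleep briefly, waits for all of
    // them, and returns how many ran to completion.
    static int runMany(int count) {
        AtomicInteger finished = new AtomicInteger();
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            threads.add(Thread.startVirtualThread(() -> {
                try {
                    Thread.sleep(10); // blocks the virtual thread, not a kernel thread
                    finished.incrementAndGet();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            }));
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return finished.get();
    }

    public static void main(String[] args) {
        System.out.println(runMany(10_000));
    }
}
```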

What is Loom?

Retrofit

- existing kernel-thread-blocking operations become non-blocking

- or rather, blocking virtual threads

What is Loom?

Retrofit examples

InputStream inputStream = null;

inputStream.read();

Writer writer = null;

writer.write("Hello, world");

Semaphore semaphore = null;

semaphore.acquire();

Thread.sleep(1000L);

What is Loom?

Also:

- continuations (low-level)

- tail-call elimination (later)

State of Loom

- started in 2017

- exploratory / research project

- currently in early access

- subject to change

We saw problems with sequential code

CompletableFuture<Boolean> activateUser(Long userId) {

return database.findUser(userId).thenCompose((u) -> {

if (u != null && !u.isActive()) {

return database.activateUser(userId)

.thenApply((r) -> true);

} else {

return CompletableFuture.completedFuture(false);

}

});

}

Back to the old days

boolean activateUser(Long userId) {

User user = database.findUser(userId);

if (user != null && !user.isActive()) {

database.activateUser(userId);

return true;

} else {

return false;

}

}

Too good to be true?

A virtual thread is still a thread.

Problems with threads

- communication

- orchestration

- interruption

Thread communication / synchronization

- semaphores

- locks

- queues / channels
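As an illustration of the queue-based option, a sketch of two threads communicating through a bounded `BlockingQueue`. The `DONE` marker and the class name are assumptions made for this example:

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

final class QueueSketch {
    private static final int DONE = -1; // hypothetical end-of-stream marker

    // A producer thread sends 1..items through a bounded queue;
    // the calling thread consumes them and returns their sum.
    static int produceAndConsume(int items) {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(4);
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= items; i++) {
                    queue.put(i); // blocks while the queue is full
                }
                queue.put(DONE);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();

        int sum = 0;
        try {
            // take() blocks while the queue is empty
            for (int v = queue.take(); v != DONE; v = queue.take()) {
                sum += v;
            }
            producer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return sum;
    }

    public static void main(String[] args) {
        System.out.println(produceAndConsume(100));
    }
}
```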

Why not primitives?

- deadlocks

- race conditions

- all the reasons why concurrent programming is hard

Why futures, again?

- performance: well studied & documented

- programming model: computation as a value

One way to reduce concurrency bugs is avoiding direct thread usage.

Towards declarative concurrency

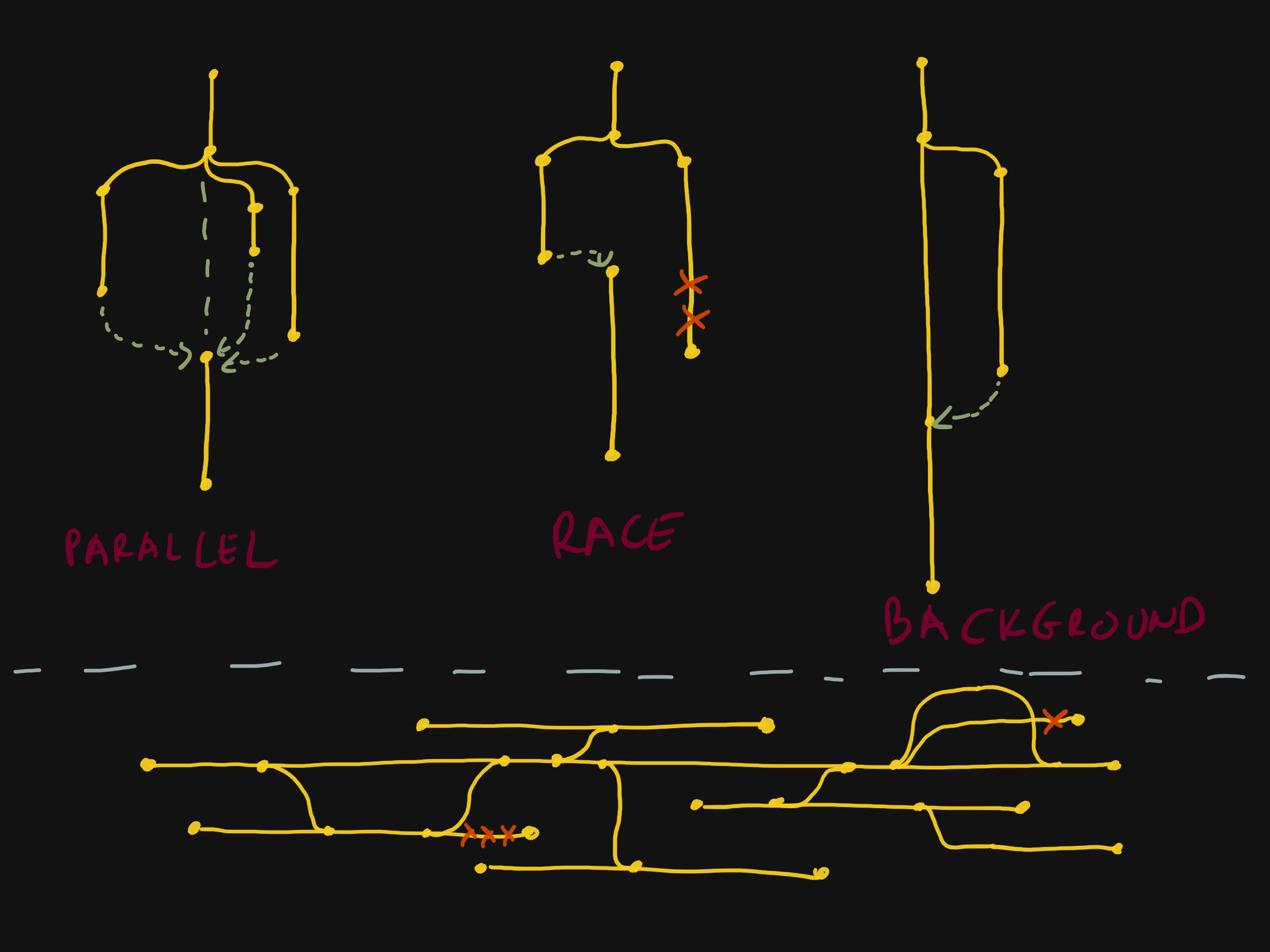

Futures give us sequential/parallel composition.

Using libraries: higher-level combinators

Fetching data in parallel: virtual threads

String sendHttpGet(String url) { ... }

String run(long userId) throws InterruptedException {

AtomicReference<String> profileResult =

new AtomicReference<String>(null);

AtomicReference<String> friendsResult =

new AtomicReference<String>(null);

CountDownLatch done = new CountDownLatch(2);

Thread.startVirtualThread(() -> {

String result = sendHttpGet(

"http://profile_service/get/" + userId);

profileResult.set(result);

done.countDown();

});

Thread.startVirtualThread(() -> {

String result = sendHttpGet(

"http://friends_service/get/" + userId);

friendsResult.set(result);

done.countDown();

});

done.await();

return "Profile: " + profileResult.get() +

", friends: " + friendsResult.get();

}

Fetching data in parallel: futures

CompletableFuture<String> sendHttpGet(String url) { ... }

CompletableFuture<String> run(long userId) {

CompletableFuture<String> profileResult =

sendHttpGet("http://profile_service/get/" + userId);

CompletableFuture<String> friendsResult =

sendHttpGet("http://friends_service/get/" + userId);

return profileResult.thenCompose(profile ->

friendsResult.thenApply(friends ->

"Profile: " + profile + ", friends: " + friends));

}
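The nested `thenCompose` / `thenApply` above can also be written with `thenCombine`, which joins two independent futures directly. A sketch, with `sendHttpGet` replaced by a stand-in that returns a canned string (an assumption, so that the example runs without a network):

```java
import java.util.concurrent.CompletableFuture;

final class CombineSketch {
    // Stand-in for the slide's sendHttpGet: returns a canned response (assumption).
    static CompletableFuture<String> sendHttpGet(String url) {
        return CompletableFuture.supplyAsync(() -> "response from " + url);
    }

    static CompletableFuture<String> run(long userId) {
        // Both requests are started before either result is awaited.
        CompletableFuture<String> profileResult =
            sendHttpGet("http://profile_service/get/" + userId);
        CompletableFuture<String> friendsResult =
            sendHttpGet("http://friends_service/get/" + userId);
        return profileResult.thenCombine(friendsResult,
            (profile, friends) -> "Profile: " + profile + ", friends: " + friends);
    }

    public static void main(String[] args) {
        System.out.println(run(7).join());
    }
}
```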

Best of both worlds?

No style fits all use-cases

- Sometimes it's better to write "synchronous" / "blocking" code

- Sometimes it's better to operate on Futures

Fetching data in parallel: speculation

String sendHttpGet(String url) { ... }

String run(long userId) {

CompletableFuture<String> profileResult = run(() ->

sendHttpGet("http://profile_service/get/" + userId)

);

CompletableFuture<String> friendsResult = run(() ->

sendHttpGet("http://friends_service/get/" + userId)

);

return "Profile: " + profileResult.get() +

", friends: " + friendsResult.get();

}
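One way the speculative helper could be approximated today is sketched below. The helper is named `fork` here (a hypothetical name, to keep it apart from the outer `run`), and a daemon cached thread pool stands in for the executor; with Loom, an executor backed by virtual threads could presumably be substituted. `sendHttpGet` is again a canned stand-in:

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Supplier;

final class ForkSketch {
    // Stand-in executor (assumption): daemon kernel threads, created on demand.
    private static final ExecutorService EXECUTOR = Executors.newCachedThreadPool(r -> {
        Thread t = new Thread(r);
        t.setDaemon(true);
        return t;
    });

    // Runs "blocking"-style code in the background and exposes it as a future.
    static <T> CompletableFuture<T> fork(Supplier<T> blockingCode) {
        return CompletableFuture.supplyAsync(blockingCode, EXECUTOR);
    }

    // Stand-in for a blocking HTTP call: returns a canned response (assumption).
    static String sendHttpGet(String url) {
        return "response from " + url;
    }

    static String run(long userId) {
        CompletableFuture<String> profileResult =
            fork(() -> sendHttpGet("http://profile_service/get/" + userId));
        CompletableFuture<String> friendsResult =
            fork(() -> sendHttpGet("http://friends_service/get/" + userId));
        // join() waits like get(), but throws only unchecked exceptions.
        return "Profile: " + profileResult.join() + ", friends: " + friendsResult.join();
    }

    public static void main(String[] args) {
        System.out.println(run(7));
    }
}
```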

Fetching data in parallel: more speculation

String sendHttpGet(String url) { ... }

String run(long userId) {

return runParallel(

() -> sendHttpGet("http://profile_service/get/" + userId),

() -> sendHttpGet("http://friends_service/get/" + userId),

(profile, friends) -> "Profile: " + profile +

", friends: " + friends

);

}
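A possible shape for such a `runParallel` combinator, built on `CompletableFuture`. This is a sketch only: the signature is guessed from the call above, and the default common pool is used where a Loom-based version might use virtual threads:

```java
import java.util.concurrent.CompletableFuture;
import java.util.function.BiFunction;
import java.util.function.Supplier;

final class RunParallelSketch {
    // Runs both suppliers concurrently, waits for both, and combines the results.
    static <A, B, R> R runParallel(Supplier<A> first,
                                   Supplier<B> second,
                                   BiFunction<A, B, R> combine) {
        CompletableFuture<A> a = CompletableFuture.supplyAsync(first);
        CompletableFuture<B> b = CompletableFuture.supplyAsync(second);
        // join() blocks the caller; a failure in either branch is rethrown here
        // as an unchecked CompletionException.
        return a.thenCombine(b, combine).join();
    }

    public static void main(String[] args) {
        String result = runParallel(
            () -> "profile-data",
            () -> "friends-data",
            (profile, friends) -> "Profile: " + profile + ", friends: " + friends);
        System.out.println(result);
    }
}
```

The caller sees an ordinary blocking method, while the futures stay an implementation detail inside the combinator.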

Why Loom, again?

Loom gives us:

- tools to write non-blocking code in the "blocking" style

- retrofitting blocking APIs into non-blocking ones

Loom doesn't give us:

- a replacement for concurrency libraries

Another view

We can represent a process as:

- code: written using the "blocking" style

- value: which can be composed & orchestrated

Better Futures

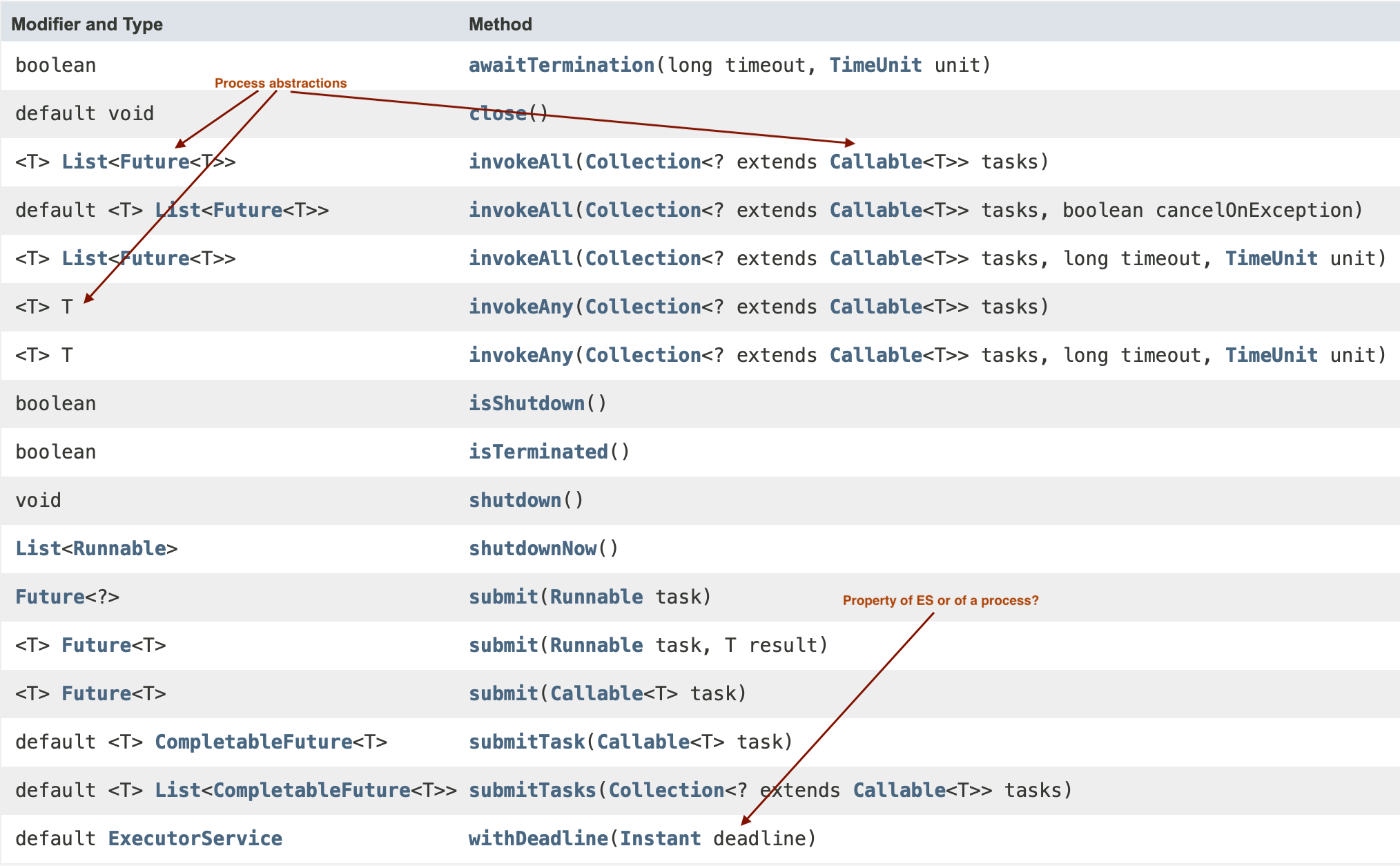

What kind of high-level operations might we expect?

retry, runAll, first, delay, ...

Often we operate on descriptions of computations, e.g. Callable<T>.
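For instance, `retry` can be sketched as a function from one `Callable<T>` to another. Nothing runs until the returned description is called. The signature is an assumption; a fuller version would likely add delays between attempts and treat interruption separately:

```java
import java.util.concurrent.Callable;
import java.util.concurrent.atomic.AtomicInteger;

final class RetrySketch {
    // Wraps a description of a computation so that it is attempted up to
    // maxAttempts times; the last failure is rethrown if every attempt fails.
    static <T> Callable<T> retry(int maxAttempts, Callable<T> task) {
        return () -> {
            Exception last = null;
            for (int attempt = 1; attempt <= maxAttempts; attempt++) {
                try {
                    return task.call();
                } catch (Exception e) {
                    last = e;
                }
            }
            if (last == null) {
                throw new IllegalArgumentException("maxAttempts must be positive");
            }
            throw last;
        };
    }

    // Demo: a task that fails twice and then succeeds; returns the number of calls made.
    static int demo() {
        AtomicInteger calls = new AtomicInteger();
        Callable<String> flaky = () -> {
            if (calls.incrementAndGet() < 3) {
                throw new IllegalStateException("transient failure");
            }
            return "ok";
        };
        try {
            retry(5, flaky).call();
        } catch (Exception e) {
            throw new IllegalStateException(e);
        }
        return calls.get();
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```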

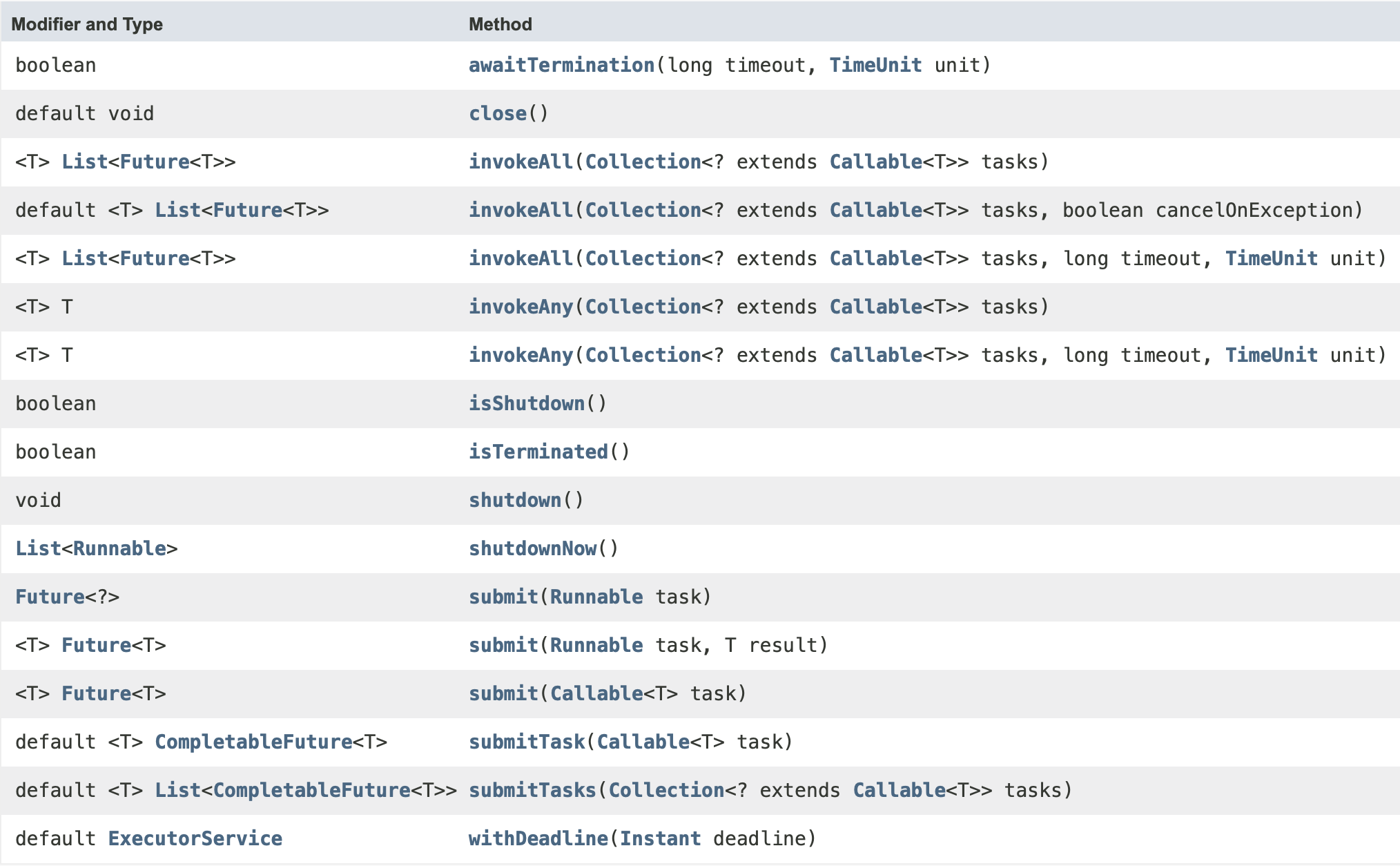

Improved ExecutorService

IOs

A Future is a value representing a running computation (eager).

An IO is a value representing a description of a computation (lazy).
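The eager/lazy difference can be made concrete with a toy `IO` type. This is a deliberately minimal sketch, not the API of any existing library; `of`, `map`, `flatMap` and `unsafeRun` are names chosen for illustration:

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;
import java.util.function.Supplier;

final class IO<T> {
    private final Supplier<T> thunk;

    private IO(Supplier<T> thunk) { this.thunk = thunk; }

    // Describes a computation; nothing is executed here.
    static <T> IO<T> of(Supplier<T> thunk) { return new IO<>(thunk); }

    <R> IO<R> map(Function<T, R> f) {
        return new IO<>(() -> f.apply(thunk.get()));
    }

    <R> IO<R> flatMap(Function<T, IO<R>> next) {
        return new IO<>(() -> next.apply(thunk.get()).unsafeRun());
    }

    // Interprets the description; every call runs the computation again.
    T unsafeRun() { return thunk.get(); }

    // Counts how often a side effect runs for an IO versus a future.
    static int[] demo() {
        AtomicInteger effects = new AtomicInteger();

        IO<Integer> io = IO.of(effects::incrementAndGet);
        int afterDescribing = effects.get(); // the IO has only been described
        io.unsafeRun();
        io.unsafeRun();
        int afterTwoRuns = effects.get();    // each run repeated the effect

        CompletableFuture<Integer> future =
            CompletableFuture.supplyAsync(effects::incrementAndGet);
        future.join();
        future.join();
        int afterFuture = effects.get();     // the future ran its effect once

        return new int[] { afterDescribing, afterTwoRuns, afterFuture };
    }

    public static void main(String[] args) {
        int[] r = demo();
        System.out.println(r[0] + " " + r[1] + " " + r[2]);
    }
}
```

Because an `IO` is only a description, it can be passed to combinators such as retry or delay before anything runs, which is harder with an already-running future.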

What IO offers above Callable

- cancellation / interruption / uninterruptible regions

- separate process definition from sequencing

- explicit concurrency

- equational reasoning

- composability

Where Loom shines: sequential logic

- majority of "business" code

- async is a technical detail

- readability is paramount

Processes represented as code.

Where IOs shine: orchestration

- niche, but business critical code

- hard to get right

- async is a first-class citizen

- readability is paramount

Processes represented as a value.

We've just scratched the surface

We haven't discussed:

- structured concurrency

- thread locals / scoped values

- reactive programming

- streaming

- actors

We've just scratched the surface

All of these will be impacted by Loom.

Loom solves some of the problems.

But it doesn't eliminate the need for declarative concurrency.

Further reading

Loom team:

Blogs which expand on what we've talked about today:

- Synchronous or asynchronous, and why wrestle with wrappers?

- Will Project Loom obliterate Java Futures?

- How Loom might fit cats-effect

Structured concurrency:

Twitter threads:

Thank you!

- This presentation

- Twitter (@adamwarski)

- Our blog

- Join us